Projects

View all projects from the Amsterdam University of Applied Sciences.

M-DPP: Digital passports for traceable fashion

-The M-DPP project develops a digital product passport for textiles, based on molecular data, enabling fair, traceable, and circular fashion.

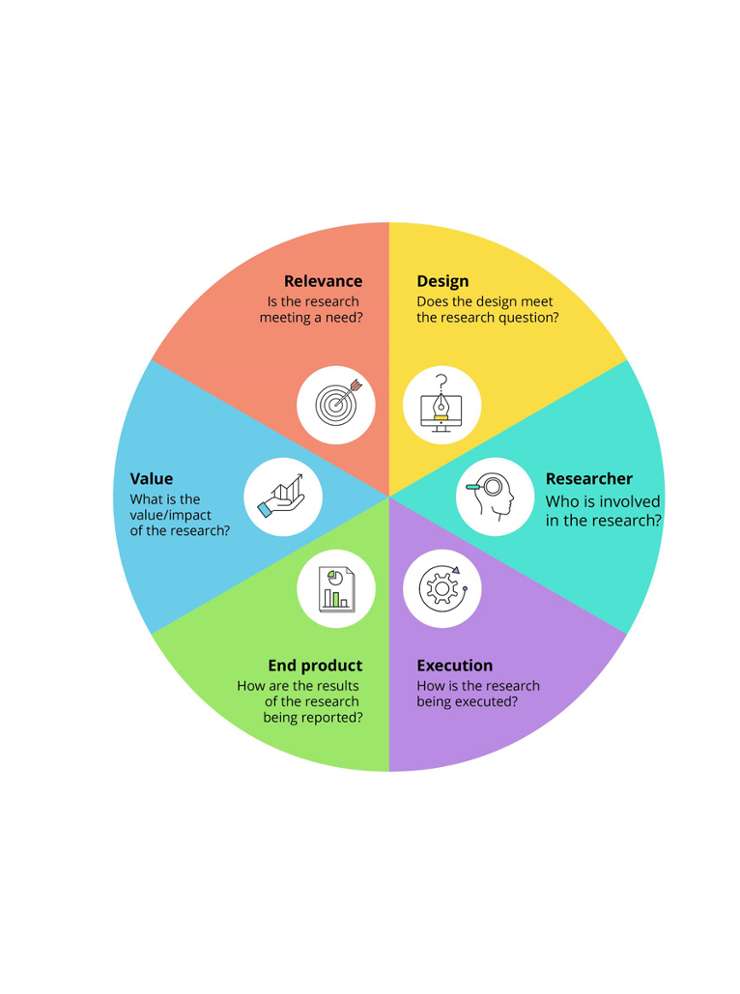

Creating the Desire for Change in Higher Education - Tools

-Tools and workshops for integrating research into education.

UrbanSWARM: Nature-Based Solutions for Circular and Future-Proof Cities

-UrbanSWARM activates underused urban spaces with high-impact nature-based solutions to tackle climate change, biodiversity loss, water issues and inequality.

Keeping it Local

-Researchers, students and creators are working together on a new fashion system that focuses on local production, co-creation and sustainable consumer behaviour.

ReFan: Reshaping the Fashion Narrative

-Students, designers, researchers and entrepreneurs came together to create a new narrative about fashion.

Generative AI Agent(s) for policy officers

-The aim of this project is to investigate how the working methods of service designers change when they incorporate AI agents into their services.

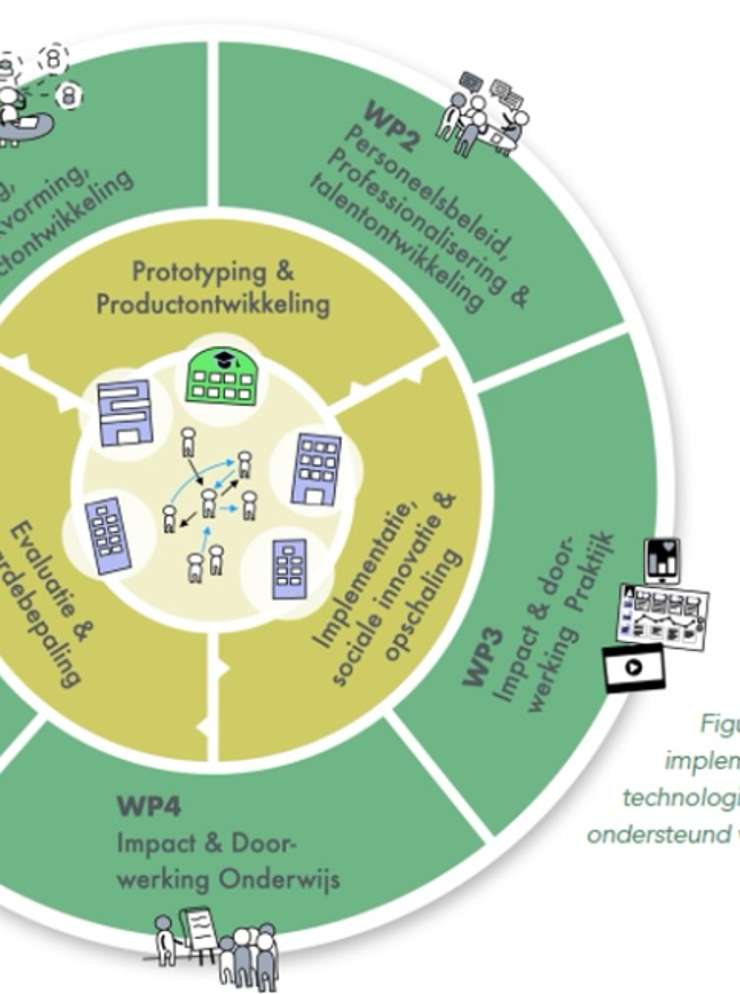

Spirit: Collaborating for person-centred care through integrated, robust, and innovative technology

-Within SPIRIT, research groups from eleven universities of applied sciences are working on how technology can contribute to humane, person-centred care.

Intercultural Chatbots: Using Generative AI to Enhance Intercultural Learning

-AUAS explores how AI chatbots can strengthen intercultural skills and prepare students for success in diverse, international study and work environments.

Care & Repair

-Extend the lifespan of clothing: 25% of the clothing in wardrobes is still technically intact, and two-thirds of discarded clothing is still perfectly wearable.

Ground for Wellbeing

-This project was launched to make the soil in Amsterdam North more climate-resilient and to preserve Tuindorp Oostzaan for the future.

Fair fashion

-From integrating digital tools like AI and 3D printing into educational practices to fostering environmental responsibility, our project aims to promote ethical fashion.

U!Innovate: Empowering Climate and Neutral Innovations

-A project about turning applied education into action, giving students hands-on experience that prepares them for more than just succeeding as entrepreneurs.

What if AI...?

-Research methods to make frictionless design visible and open for discussion

Storytelling for Collective Care in our Neighborhoods

-Can participatory storytelling contribute to collective care in Amsterdam Nieuw-West?

Understanding Durability in Circular Business Models (DURBUS)

-What does 'durability' really mean in the textiles and apparel industry, and how can businesses integrate durability into their business models?

Urban Upcycling: High-quality reuse of residual flows

-The Netherlands aims to be fully circular by 2050. This requires, among other things, the high-quality reuse of valuable residual flows, also known as upcycling.